Supercomputing Frontiers Europe 2019

Keynote speakers

Featured evening event speaker

Invited speakers

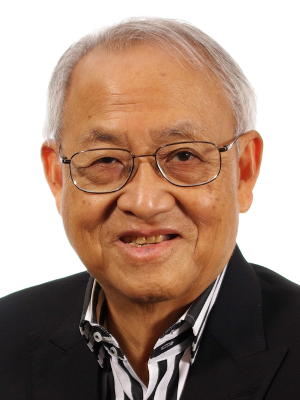

Rupak Biswas

NASA Ames Research Center, USA

Title: Advanced Computing at NASA

Dr. Rupak Biswas is currently the Director of Exploration Technology at NASA Ames Research Center, Moffett Field, Calif., and has held this Senior Executive Service (SES) position since January 2016. In this role, he is in charge of planning, directing, and coordinating the technology development and operational activities of an organization that comprises advanced supercomputing, human systems integration, intelligent systems, and entry systems technology. The directorate consists of approximately 700 employees with an annual budget of $160 million, and includes two of NASA’s critical and consolidated infrastructures: the arc jet testing facility and the supercomputing facility. He is also the Manager of the NASA-wide High End Computing Capability Project, which provides a full range of advanced computational resources and services to numerous programs across the agency. In addition, he leads NASA’s emerging quantum computing effort. Dr. Biswas received his Ph.D. in Computer Science from Rensselaer in 1991 and has been at NASA ever since. During this time, he has received several NASA awards, including the Exceptional Achievement Medal and the Outstanding Leadership Medal (twice). He is an internationally recognized expert in high performance computing and has published more than 160 technical papers, received many Best Paper awards, edited several journal special issues, and given numerous lectures around the world.

Leon Chua

University of California Berkeley, USA

Tentative Title: Memristor – Remembrance of things Past

Leon Chua is widely known for his invention of the Memristor and Chua’s Circuit. His research has been recognized internationally through numerous major awards, including 17 honorary doctorates from major universities in Europe and Japan, and he holds 7 U.S. patents. He was elected a Fellow of the IEEE in 1974, a foreign member of the European Academy of Sciences (Academia Europaea) in 1997, a foreign member of the Hungarian Academy of Sciences in 2007, and an honorary fellow of the Institute for Advanced Study at the Technical University of Munich, Germany, in 2012. He has been honored with many major prizes, including the Frederick Emmons Terman Award in 1974, the IEEE Neural Networks Pioneer Award in 2000, the first IEEE Gustav Kirchhoff Award in 2005, the International Francqui Chair (Belgium) in 2006, a Guggenheim Fellowship in 2010, the Leverhulme Professor Award (United Kingdom) during 2010-2011, and the EU Marie Curie Fellow award in 2013.

Prof. Chua is widely known for the 12 hugely popular lectures he presented in the HP Chua Lecture Series, entitled “From Memristors and Cellular Nonlinear Networks to the Edge of Chaos”, during the fall of 2015; they are now available on YouTube.

Paul Messina

Argonne National Laboratory, USA

Tentative Topic: Exascale Supercomputing

Paul Messina is Director of Argonne National Laboratory’s Computational Science Division and an Argonne Distinguished Fellow. During 2015-2017, he served as Project Director for the U.S. Department of Energy Exascale Computing Project. From 2008-2015, he was Director of Science for the Argonne Leadership Computing Facility.

From 1987 to 2002, he was founding Director of the Center for Advanced Computing Research at the California Institute of Technology (Caltech), PI for the CASA gigabit network testbed, Chief Architect for NPACI, and co-PI for the National Virtual Observatory and TeraGrid. In 1990, he conceived and led the Concurrent Supercomputing Consortium, which created and operated the Intel Touchstone Delta, at the time the world’s most powerful scientific computer. In 1999-2000, while on leave from Caltech, he led the DOE-NNSA ASCI program.

During 1973-1987, he held a number of positions in the Applied Mathematics Division and was founding Director of the Mathematics and Computer Science Division at Argonne.

Taisuke Boku

University of Tsukuba, Japan

Title: What’s the next step of accelerated supercomputing?

Taisuke Boku received his Master’s and PhD degrees from the Department of Electrical Engineering at Keio University. After serving as an assistant professor in the Department of Physics at Keio University, he joined the Center for Computational Sciences (formerly the Center for Computational Physics) at the University of Tsukuba, where he is currently the deputy director, the HPC division leader, and the system manager of supercomputing resources. He has worked there for more than 20 years on HPC system architecture, system software, and performance evaluation of various scientific applications. Over these years, he has played a central role in developing representative Japanese supercomputers, including CP-PACS (ranked number one in the TOP500 in 1996), FIRST (a hybrid cluster with a gravity accelerator), PACS-CS (a bandwidth-aware cluster), and HA-PACS (a high-density GPU cluster). He is also a member of the system architecture working group for the Post-K Computer development. He is a co-recipient of the 2011 ACM Gordon Bell Prize.

Abstract

In this talk, I will present our current research on advanced hybrid cluster computing with the latest FPGA technology, aimed at flexible and power-efficient accelerated computing for the next generation. We apply FPGA technology not just to computation but also to the interconnect of large-scale parallel systems, to avoid communication bottlenecks. The concept builds on the high-performance optical interconnection interfaces provided by the latest FPGAs, with speeds of up to 100 Gbps on multiple communication ports. We have been developing a prototype system based on this concept, named AiS (Accelerator in Switch), which combines computation and communication on the FPGA device and supports flexible programming in OpenCL instead of traditional Verilog HDL, making FPGA programming more user-friendly.

Besides using FPGAs in clusters, we still rely on GPUs for their excellent performance on problems, or parts of problems, where they work well. Some applications, however, contain parts with too little parallelism to use a GPU efficiently, or irregular computation that does not fit the GPU’s SIMT execution model; these parts become performance bottlenecks, especially under strong scaling. We therefore combine GPUs and FPGAs as different types of accelerators.

This talk gives an overview of the AiS concept and presents the prototype design and implementation of an AiS-based system developed at the Center for Computational Sciences, University of Tsukuba. It also introduces Cygnus, our new machine based on this concept, which will be delivered in March 2019, and presents several preliminary performance evaluations on real applications.

Hamish Carr

University of Leeds, UK

Title: Why Topology is Necessary at Exascale – And Why it’s Not Easy

Dr. Hamish Carr is a Senior Lecturer in the School of Computing at the University of Leeds, and is one of the principal researchers in the field of computational topology and the mathematical foundations of scientific visualization. His thesis work included the standard efficient serial algorithm for, and visualizations based on, the topological structure called the contour tree, and he has been developing, refining, and applying these tools to a variety of data analytic problems ever since. He has also worked on accelerating direct volume rendering, on improving computational statistics for visualization, and on tools for directly visualizing two or more functions rather than the single function normally assumed. In recent years, he has been collaborating with Los Alamos and Lawrence Berkeley National Laboratories on developing topological tools for shared-memory and distributed parallel machines, and has contributed to the new VTK-m multicore visualization toolkit under the DOE ALPINE project.

Abstract

As computation scales to exabyte levels, humans are increasingly unable to process the data directly. In a realistic assessment, a human might meaningfully perceive 10 GB of data in a lifetime, which requires billion-fold data reduction without information loss. As a result, techniques like computational topology, with mathematically well-founded approaches, become increasingly necessary to analyse and display (i.e. visualise) extreme data sets. However, much of the mathematics on which these methods are based is either linearly defined or couched in serial metaphors. As a result, one of the major challenges to exascale comprehension is now the ability to scale computational topology to massive hybrid systems using both shared-memory and distributed parallelism. This talk will therefore give an overview of the basic ideas behind computational topology at scale, and illustrate recent approaches to scalability.
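To make “couched in serial metaphors” concrete, here is a minimal sketch of the serial sweep behind contour-tree construction: the join (merge) tree of a scalar field on a mesh, built by visiting vertices from highest to lowest value and tracking superlevel-set components with a union-find structure. It is a textbook-style illustration under our own assumptions (the mesh is given as an edge list, ties are broken by vertex index), not the speaker’s code or the VTK-m implementation, and the function name `join_tree` is hypothetical. A full contour tree additionally needs the split tree (the same sweep from low to high) and a step that merges the two.

```python
from typing import Dict, List, Sequence, Tuple


def join_tree(values: Sequence[float], edges: Sequence[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Augmented join (merge) tree of a scalar field on a graph (hypothetical helper).

    Returns arcs (upper, lower): each links the current lowest vertex of a
    superlevel-set component to the next, lower vertex that joins it.
    """
    n = len(values)
    neighbours: Dict[int, List[int]] = {v: [] for v in range(n)}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)

    parent = list(range(n))   # union-find forest over vertices
    lowest = list(range(n))   # lowest processed vertex of each component (valid at roots)

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Global sort: highest value first, ties broken by index for a total order.
    order = sorted(range(n), key=lambda v: (values[v], v), reverse=True)
    seen = [False] * n
    arcs: List[Tuple[int, int]] = []
    for v in order:
        seen[v] = True
        for u in neighbours[v]:
            if not seen[u]:
                continue  # u is lower than v and not yet in any superlevel set
            ru, rv = find(u), find(v)
            if ru == rv:
                continue  # already in the same component
            arcs.append((lowest[ru], v))  # component of u continues (or merges) at v
            parent[ru] = rv
            lowest[rv] = v  # v is now the lowest vertex of the merged component
    return arcs
```

For the 1D field `[0, 3, 1, 4, 2]` on a path of five vertices, the two maxima (vertices 3 and 1) merge at vertex 2, and the tree ends at the global minimum, vertex 0. The single global sort and the shared union-find structure are what make this algorithm hard to distribute: each vertex can only be processed once every higher vertex has been.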

David Coster

Max Planck Institute for Plasma Physics, Germany

Title: Uncertainty at the Exa-Scale: the development of validated multi-scale workflows that quantify uncertainty to produce actionable results

David Coster obtained his Ph.D. in plasma physics at Princeton University in 1993 and has been at the Max Planck Institute for Plasma Physics ever since. His research interests include edge plasma physics in tokamaks, building workflows to solve problems that would be intractable with a monolithic code, and using high-performance computers to run such workflows.

Abstract

The VECMA project (funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 800925) aims to enable a diverse set of multiscale, multiphysics applications — from fusion and advanced materials through climate and migration, to drug discovery and the sharp end of clinical decision making in personalised medicine — to run on current multi-petascale computers and emerging exascale environments with high fidelity such that their output is “actionable”. That is, the calculations and simulations are certifiable as validated (V), verified (V) and equipped with uncertainty quantification (UQ) by tight error bars such that they may be relied upon for making important decisions in all the domains of concern. The current status of the “fast-track” applications will be covered, as well as the progress in developing an open source toolkit for multiscale VVUQ based on generic multiscale VV and UQ primitives.
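As a generic illustration of the UQ part of VVUQ, the simplest primitive is Monte Carlo propagation: sample the uncertain inputs, run the model once per sample, and report the output mean with an error bar. The sketch below is not the VECMA toolkit; the function names, the Gaussian input distribution, and the toy `decay` model are hypothetical assumptions chosen only for illustration.

```python
import math
import random
import statistics
from typing import Callable, Tuple


def monte_carlo_uq(model: Callable[[float], float], mean: float, std: float,
                   n_samples: int = 10_000, seed: int = 0) -> Tuple[float, float, float]:
    """Propagate a Gaussian input uncertainty through `model` (hypothetical helper).

    Returns (output mean, output standard deviation, standard error of the mean).
    """
    rng = random.Random(seed)  # fixed seed for reproducible samples
    outputs = [model(rng.gauss(mean, std)) for _ in range(n_samples)]
    mu = statistics.fmean(outputs)
    sigma = statistics.stdev(outputs)
    return mu, sigma, sigma / math.sqrt(n_samples)


def decay(rate: float) -> float:
    # Hypothetical toy model: fraction remaining after unit time at the given decay rate.
    return math.exp(-rate)


if __name__ == "__main__":
    mu, sigma, sem = monte_carlo_uq(decay, mean=0.5, std=0.05)
    print(f"output = {mu:.4f} +/- {sigma:.4f} (standard error {sem:.1e})")
```

In a real multiscale workflow each `model` call would be an expensive coupled simulation, so the number of samples multiplied by the cost per sample is what drives the demand for petascale and exascale resources.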

Evangelos Eleftheriou

IBM Research Labs Zurich, Switzerland

Title: “In-memory Computing”: Accelerating AI Applications

Evangelos Eleftheriou received his Ph.D. degree in Electrical Engineering from Carleton University, Ottawa, Canada, in 1985. In 1986, he joined the IBM Research – Zurich laboratory in Rüschlikon, Switzerland, as a Research Staff Member. Since 1998, he has held various management positions and is currently responsible for the neuromorphic computing activities of IBM Research – Zurich. His research interests include signal processing, coding, non-volatile memory technologies, and emerging computing paradigms such as neuromorphic and in-memory computing for AI applications. He has authored or coauthored over 200 publications and holds over 160 patents (granted and pending applications). In 2002, he became a Fellow of the IEEE. He was co-recipient of the 2003 IEEE Communications Society Leonard G. Abraham Prize Paper Award. He was also co-recipient of the 2005 Technology Award of the Eduard Rhein Foundation. In 2005, he was appointed an IBM Fellow, and the same year he was inducted into the IBM Academy of Technology. In 2009, he was co-recipient of the IEEE CSS Control Systems Technology Award and of the IEEE Transactions on Control Systems Technology Outstanding Paper Award. In 2016, he received an honoris causa professorship from the University of Patras, Greece. In 2018, he was inducted into the US National Academy of Engineering as a Foreign Member.

Abstract

In today’s computing systems based on the conventional von Neumann architecture, there are distinct memory and processing units. Performing computations results in a significant amount of data being moved back and forth between the physically separated memory and processing units. This costs time and energy, and constitutes an inherent performance bottleneck. It is becoming increasingly clear that for application areas such as AI (and indeed cognitive computing in general), we need to transition to computing architectures in which memory and logic coexist in some form. Brain-inspired neuromorphic computing and the fascinating new area of in-memory computing, or computational memory, are two key non-von Neumann approaches being researched. A critical requirement in these novel computing paradigms is a very-high-density, low-power, variable-state, programmable, and non-volatile nanoscale memory device. There are many examples of such nanoscale memory devices, in which information is stored either as charge or as resistance. One particularly promising example is phase-change memory (PCM), which is very well suited to this need owing to its multi-level storage capability and potential scalability.

In in-memory computing, the physics of the nanoscale memory devices, as well as the organization of such devices in crossbar arrays, are exploited to perform certain computational tasks within the memory unit. I will present how computational memories accelerate AI applications and will show small- and large-scale experimental demonstrations that perform high-level computational primitives, such as ultra-low-power inference engines, optimization solvers including compressed sensing and sparse coding, linear solvers, and temporal correlation detection. Moreover, I will discuss how effectively this approach can address not only inference but also the training of deep neural networks. The results show that this coexistence of computation and storage at the nanometer scale could be the enabler for new, ultra-dense, low-power, and massively parallel computing systems. Thus, by augmenting conventional computing systems, in-memory computing could help achieve orders-of-magnitude improvements in performance and efficiency.
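The key computational primitive of a crossbar is an analog matrix-vector multiplication: voltages applied to the rows produce, on each column, a current equal to the sum of conductance times voltage, by Ohm’s law and Kirchhoff’s current law. The sketch below models only the ideal case (no device noise, conductance drift, nonlinearity, or ADC quantization, all of which matter for real PCM arrays). The differential-pair mapping of signed weights is one common scheme, assumed here rather than taken from the talk, and both function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_mvm(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Ideal crossbar read-out: column current I[j] = sum_i G[i][j] * V[i].

    Each device passes current G[i][j] * V[i] (Ohm's law), and the currents
    flowing into column j add up on its wire (Kirchhoff's current law).
    """
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one input voltage per crossbar row")
    currents = [0.0] * len(conductances[0])
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            currents[j] += g * v
    return currents


def signed_mvm(weights: Matrix, x: List[float], g_max: float = 1.0) -> List[float]:
    """Compute y[j] = sum_i weights[i][j] * x[i] using only non-negative conductances.

    Conductances cannot be negative, so each weight is split over a pair of
    devices (w = g_plus - g_minus) and the two column currents are subtracted.
    """
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = g_max / w_max  # map the largest |weight| to the largest conductance
    g_plus = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * scale for w in row] for row in weights]
    i_plus = crossbar_mvm(g_plus, x)
    i_minus = crossbar_mvm(g_minus, x)
    return [(p - m) / scale for p, m in zip(i_plus, i_minus)]
```

The point of the physical version is that the whole double loop happens in a single analog read step, in place, without moving the weights out of memory.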

Anne C. Elster

Norwegian University of Science and Technology, Norway

Title: Supercomputing and AI: Impact and Opportunities

Dr. Anne C. Elster (PhD Cornell Univ. 1984) is a Professor at the Department of Computer Science (IDI) at the Norwegian Univ. of Science & Technology (NTNU) in Trondheim, Norway, where she established the IDI/NTNU HPC-Lab, a well-respected research lab in heterogeneous computing. She also currently holds a Visiting Scientist position at the Univ. of Texas at Austin, USA.

Her current research interests are in high-performance parallel computing, focusing on developing good models and tools for heterogeneous computing and parallel software environments, including methods that apply machine learning to code optimization and image processing, as well as developing parallel scientific codes that interact visually with users by taking advantage of the power of modern GPUs. Her novel fast linear bit-reversal algorithm from 1987 is still noteworthy.

She has given several invited talks and has been an active participant and committee member of ACM/IEEE SC (Supercomputing) since 1990. She also served on the MPI-1 and MPI-2 Forums, is a Senior Member of IEEE and a Life Member of AGU (American Geophysical Union), and is a member of ACM, SIAM, and Tekna. Her funding partners and collaborators include EU H2020, the Research Council of Norway, AMD, ARM, NVIDIA, Statoil, and Schlumberger.

Abstract

Supercomputing is still needed to achieve the desired performance for computationally intensive tasks ranging from Big Data to astrophysics and weather simulations. Traditionally, the HPC field drove companies like Cray, IBM, and others to develop processors for supercomputing. However, since the proliferation of COTS (commercial off-the-shelf) processors, market forces in other fields, including GPUs for gaming and, more recently, AI, have driven innovation in processor design. This means that algorithms, tools, and applications should now adapt to and take advantage of tensor processors, machine learning techniques, and other related technologies, rather than expect old computational models to hold true. As will be discussed in this talk, this is also an opportunity to help develop better graph algorithms for AI, as well as to apply some techniques from AI to HPC challenges.

Wojciech Grochala

University of Warsaw, Poland

Topic: Chemistry with supercomputers

Wojciech Grochala (b. 1972) studied chemistry at the University of Warsaw (Poland) and received his Ph.D. in molecular spectroscopy under the supervision of Jolanta Bukowska. After postdoctoral work in theoretical chemistry with Roald Hoffmann (Cornell, US), and in experimental inorganic and materials chemistry with Peter P. Edwards (Birmingham, UK), he returned to Poland. He obtained his habilitation at the University of Warsaw in 2005, and in 2011 he was appointed Full Professor. Wojciech Grochala received the Kosciuszko Foundation Fellowship (US), Royal Society of Chemistry Postdoctoral and Research Fellowships (UK), The Crescendum est Polonia Foundation Fellowship (Poland), and the Świętosławski Prize 2nd degree (Polish Chem. Soc., Warsaw section). In 2014 he was granted a titular professorship by the President of Poland. Since 2005, Grochala has headed the Laboratory of Technology of Novel Functional Materials, currently with some 30 group members. He has published more than 150 papers in reputable journals.

Abstract

Chemistry constantly evolves, but these days, more than ever before, it develops horizontally. As the major principles underlying chemistry have already been determined, pleasant surprises come not from new phenomena but mostly from known phenomena being discovered in unprecedented systems, or, interestingly, in systems that have been known for a very long time but had not been explored sufficiently. The great variety of chemical systems makes it difficult to choose which systems are worth studying.

For nearly two decades, we have been studying an exotic family of compounds containing silver and fluorine. Based on quantum-mechanical calculations, these systems were predicted to exhibit substantial valence orbital mixing (covalence) and a range of fascinating phenomena, including high-Tc superconductivity (conduction of electric current without resistance) and record-strong magnetic interactions. More recently, rapid progress in computing resources at ICM UW has allowed us to confirm these early predictions using more precise computational methods and to confront them with experimental findings, with fascinating outcomes that I will describe during the talk.

Torsten Hoefler

ETH Zürich, Switzerland

Tentative Topic: Extreme Scale Graphs

Torsten is an Associate Professor of Computer Science at ETH Zürich, Switzerland. Before joining ETH, he led the performance modeling and simulation efforts of parallel petascale applications for the NSF-funded Blue Waters project at NCSA/UIUC. He is also a key member of the Message Passing Interface (MPI) Forum, where he chairs the “Collective Operations and Topologies” working group. Torsten won best paper awards at the ACM/IEEE Supercomputing Conference SC10, SC13, SC14, EuroMPI’13, HPDC’15, HPDC’16, IPDPS’15, and other conferences. He has published numerous peer-reviewed scientific conference and journal articles and authored chapters of the MPI-2.2 and MPI-3.0 standards. He received the Latsis prize of ETH Zurich as well as an ERC starting grant in 2015. His research interests revolve around the central topic of “Performance-centric System Design” and include scalable networks, parallel programming techniques, and performance modeling. Additional information about Torsten can be found on his homepage at htor.inf.ethz.ch.

Yoshinori Kimura

Infinite Curation, Japan

Title: Sequence Similarity Search for Large-scale Metagenomic Data using Liquid Immersion Cooling Supercomputer

Yoshinori Kimura is a Senior Software Engineer at Infinite Curation (seconded from the group company ExaScaler). He has 15 years of experience in image processing, volume visualization, and customer-oriented applications in the medical field.

Mr. Kimura started his career at TeraRecon as a co-creator of AquariusNET, the world’s first commercial streaming, thin-client, enterprise 3D imaging server. After designing and developing the basic framework of Aquarius iNtuition, the next generation of AquariusNET, he concentrated on developing more academic functions such as MRI ejection fraction, flow, and tractography analysis. Radiologists and clinicians have praised the ease of use and stability of both AquariusNET and Aquarius iNtuition, and thousands of systems have been installed worldwide.

Previously, drawing on his experience with parallel processing systems from his postgraduate studies, Mr. Kimura developed an interface between a cloud service and a quantum computer simulator on the PEZY-SCnp/ZettaScaler-1.6 system at ExaScaler. He was then seconded to Infinite Curation, a group company of ExaScaler, to develop medical and genomic applications for the PEZY-SC/ZettaScaler system. The design and analysis skills gained through his career, together with his flexibility in learning new fields, suit him well to this work. He received a Bachelor’s degree in Mechanical Engineering and a Master’s degree in Information Science from Tohoku University in 1998 and 2000, respectively.

Abstract

We have developed a novel sequence similarity search tool, “PZLAST”, on the liquid immersion cooling supercomputer ZettaScaler-2.2, which consists of multiple PEZY-SC2 MIMD many-core processors.

As the cost of DNA sequencing decreases, metagenomic studies of skin, mouth, gut, soil, and water are growing. As knowledge of the various metagenomes obtained from these studies accumulates, the size of public metagenomic databases is increasing dramatically. This gives researchers many opportunities for exhaustive searches of large-scale databases, and opens new business capabilities through shotgun metagenome sequencing. BLAST is a well-known, highly sensitive similarity search tool for metagenomic analysis; however, larger databases and/or sequencing datasets require longer search times. To speed it up, CLAST was developed on GPUs by effectively parallelizing the BLAST algorithm, and it achieved an 80-fold speedup over the sequential BLAST algorithm while retaining its high sensitivity.

Building on the functionality and parallelization concept of CLAST, PZLAST improves calculation speed by exploiting the MIMD features of the PEZY-SC2, and adds protein-vs-protein sequence similarity search, which CLAST does not support. It also introduces parallel processing of large-scale data on ZettaScaler-2.2. As a result, PZLAST achieves 237-fold scalability using 256 PEZY-SC2s.
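To put the 237-fold figure in perspective, assume (our assumption; the abstract does not state the baseline) that it is the speedup of 256 PEZY-SC2s over a single PEZY-SC2. Parallel efficiency and the Karp-Flatt metric, i.e. the serial fraction implied by a measured speedup, then follow directly. The function names below are hypothetical.

```python
def parallel_efficiency(speedup: float, n_units: int) -> float:
    """Fraction of ideal (linear) speedup achieved on n_units processors."""
    return speedup / n_units


def karp_flatt_serial_fraction(speedup: float, n_units: int) -> float:
    """Karp-Flatt metric: e = (1/S - 1/p) / (1 - 1/p) for speedup S on p units."""
    return (1.0 / speedup - 1.0 / n_units) / (1.0 - 1.0 / n_units)


if __name__ == "__main__":
    # Assumption: 237 is the speedup of 256 chips relative to one chip.
    speedup, chips = 237.0, 256
    print(f"parallel efficiency: {parallel_efficiency(speedup, chips):.3f}")
    print(f"implied serial fraction: {karp_flatt_serial_fraction(speedup, chips):.2e}")
```

Under that assumption, this prints an efficiency of 0.926 and an implied serial fraction of 3.14e-04, i.e. roughly 93% of linear scaling.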

Tomasz Kościółek

University of California San Diego, USA

Tentative Title: Computational challenges in understanding the structure and function of the microbiome

Tomasz Kościółek is a postdoctoral research associate in Rob Knight’s Lab at the University of California San Diego. He holds an MSc in chemistry (2010) from the Jagiellonian University in Kraków, Poland, and a PhD in biological sciences (2015) from University College London, United Kingdom. His doctoral work focused on the application of sequence covariation methods to protein structure prediction and on computational predictions of dynamic properties in intrinsically disordered proteins. Since joining the Knight Lab, he has focused on large-scale predictions of the structure and function of microbial proteins (as lead researcher on the Microbiome Immunity Project, a collaboration involving IBM World Community Grid), the development of scalable computational methods for microbiome analyses (e.g. QIIME 2 and Qiita), and studies of the role of the microbiome in health and disease, especially in the context of mental health.

Alex Wright-Gladstein

Ayar Labs, USA

Title: Optical communications for high-bandwidth chip I/O in computing systems

Alex Wright-Gladstein is co-founder of Ayar Labs, a company that is making computing faster and more energy efficient by using light to move data between chips instead of electricity. She founded the company while at MIT, where she met the inventors of the first processor to communicate using light. Prior to Ayar Labs, Alex was the Energy Entrepreneurship Practice Leader at MIT, an Energy Markets Specialist and Program Manager at EnerNOC, the first smart grid company, and the founder of Everyday Science.

Per Öster

CSC – IT Center for Science Ltd, Finland

Title: Finnish HPC Roadmap and plans for EuroHPC

Per Öster is Director at CSC, where he leads the Research Infrastructure and Policy unit. The unit manages relations with major research infrastructures and is responsible for CSC’s and Finland’s participation in e-infrastructures and major initiatives such as EGI, the European Open Science Cloud, EUDAT, the Research Data Alliance (RDA), and PRACE, as well as for hosting the Finnish node of the bio-computing infrastructure ELIXIR. Öster is one of the founders of EGI and was its first Council chair. He is presently chair of the board of directors of EUDAT Ltd and also represents Finland and CSC on the board of ELIXIR. Furthermore, he represents CSC in Knowledge Exchange, a partnership to promote open scholarship and improve services for higher education and research in Europe.

Per Öster has more than 20 years of experience in computational science from both academia and industry. He has a background in theoretical atomic physics and received, in 1990, a doctorate in physics from the Department of Physics, University of Gothenburg/Chalmers University of Technology.

Michael Reimann

École polytechnique fédérale de Lausanne, Switzerland

Title: Studying brain structure and function through detailed computational modelling and topological analysis

Michael Reimann is a senior staff scientist in the Blue Brain Project at the EPFL, Switzerland. For his doctoral research he developed algorithms to derive microcircuit connectivity and study the emergence of microcircuit activity.

He is one of the main contributors to the digital microcircuit modeling and simulation efforts of the Blue Brain Project. His research focuses on synaptic connectivity at all scales, how it is shaped by plasticity mechanisms, and how this in turn determines brain function. To this end, he employs advanced simulation tools on massively parallel supercomputing systems and develops novel analyses based on classical information theory and algebraic topology.

Abstract

The function of the brain is thought to be determined by the structure of its synaptic connections. Unfortunately, the study of this relation has been hampered by incomplete knowledge of the synaptic wiring of neurons on the local scale of a microcircuit or brain region. Using massively parallel supercomputing architectures, we were able to build, simulate, and analyze the most detailed model of a rat brain region to date, based on the available biological data and principles and extensively validated. Using methods from algebraic topology, we characterized highly non-random structures in the resulting predicted synaptic connectivity in the form of all-to-all connected, directed motifs. When we simulated the electrical activity of the modeled neurons, we found that the topologically identified motifs led to correlated neuronal activity, furthering our understanding of the link between synaptic structure and neuronal function. Running the model on the Mira system at Argonne National Laboratory also allowed us to study how neuronal activity in turn reshapes connectivity through mechanisms of synaptic plasticity, completing a loop from structure to function and back again.
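One common way to formalize “all-to-all connected, directed motifs”, and the one assumed here, is the directed simplex: an ordered sequence of neurons (v0, ..., vk) with a connection vi → vj for every i < j, so the motif has a single source and a single sink. The sketch below counts such simplices in a small connectivity graph by repeatedly extending a simplex with a vertex that all current members project to. It is an illustration on toy data, not the Blue Brain Project’s analysis code, and `count_directed_simplices` is a hypothetical name. With reciprocal connections, the same set of neurons can be counted once per valid ordering.

```python
from typing import Dict, Iterable, List, Set, Tuple


def count_directed_simplices(edges: Iterable[Tuple[int, int]], max_dim: int) -> List[int]:
    """Count directed simplices of dimension 0..max_dim in a directed graph.

    A directed k-simplex is an ordered sequence (v0, ..., vk) of distinct
    vertices with an edge vi -> vj for every i < j.
    """
    out: Dict[int, Set[int]] = {}
    nodes: Set[int] = set()
    for a, b in edges:
        nodes.update((a, b))
        if a != b:  # ignore self-loops
            out.setdefault(a, set()).add(b)

    counts = [0] * (max_dim + 1)

    def extend(dim: int, sinks: Set[int]) -> None:
        # `sinks` holds every vertex that all members of the current simplex project to.
        counts[dim] += 1
        if dim == max_dim:
            return
        for w in sinks:
            # Appending w as the new sink keeps only targets shared with w.
            extend(dim + 1, sinks & out.get(w, set()))

    for v in nodes:
        extend(0, out.get(v, set()))
    return counts
```

For four neurons wired as a complete feed-forward chain (every lower-numbered neuron projects to every higher-numbered one), the counts are `[4, 6, 4, 1]`: four neurons, six connections, four directed triangles, and one directed 3-simplex. A 3-cycle, by contrast, contains no directed triangle.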